Implementation of the Matrix::multiply method for GPU-accelerated matrix multiplication.

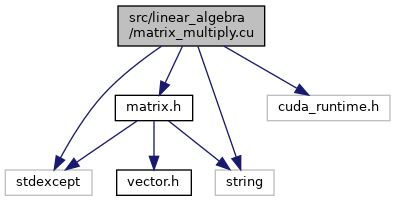

Include dependency graph for matrix_multiply.cu:


Functions

| Type | Function | Description |
|---|---|---|
| `__global__ void` | `matrixMultiplyKernel(const double *a, const double *b, double *c, int m, int n, int k)` | CUDA kernel for matrix multiplication. |

Detailed Description

Implementation of the Matrix::multiply method for GPU-accelerated matrix multiplication.

Definition in file matrix_multiply.cu.

Function Documentation

◆ matrixMultiplyKernel()

`__global__ void matrixMultiplyKernel(const double *a, const double *b, double *c, int m, int n, int k)`

CUDA kernel for matrix multiplication.

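- Parameters
  - `a`: Pointer to the data of the first input matrix, A (m × n).
  - `b`: Pointer to the data of the second input matrix, B (n × k).
  - `c`: Pointer to the data of the output matrix, C = AB (m × k).
  - `m`: Number of rows in matrix A (and therefore in C).
  - `n`: Number of columns in matrix A, which must equal the number of rows in matrix B.
  - `k`: Number of columns in matrix B (and therefore in C).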
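Read together, the parameters describe the product C = AB with the shapes below: each element of C is an inner product of length n, so the full product costs m·n·k multiply-add operations. The last line shows the flat-array indexing under an assumption of row-major storage; this page does not state the storage order, so treat that line as illustrative only.

```latex
A \in \mathbb{R}^{m \times n}, \qquad B \in \mathbb{R}^{n \times k}, \qquad C = AB \in \mathbb{R}^{m \times k}

C_{ij} = \sum_{p=0}^{n-1} A_{ip}\, B_{pj}, \qquad 0 \le i < m,\; 0 \le j < k

% assuming row-major storage of a, b, c:
\texttt{c}[\,i k + j\,] = \sum_{p=0}^{n-1} \texttt{a}[\,i n + p\,]\; \texttt{b}[\,p k + j\,]
```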

Definition at line 20 of file matrix_multiply.cu.
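The kernel body itself is not reproduced on this page. A minimal sketch, assuming row-major storage and the common naive scheme in which one thread computes one element of C, might look like the following. The kernel body, the `CUDA_CHECK` macro, the host wrapper `multiplyOnDevice`, and the 16 × 16 block size are all illustrative assumptions, not the contents of matrix_multiply.cu or the actual `Matrix::multiply` method.

```cuda
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <cstdlib>
#include <vector>
#include <cuda_runtime.h>

// Hypothetical helper: abort with a message if a CUDA runtime call fails.
#define CUDA_CHECK(call)                                                \
    do {                                                                \
        cudaError_t err_ = (call);                                      \
        if (err_ != cudaSuccess) {                                      \
            std::fprintf(stderr, "CUDA error %s at %s:%d\n",            \
                         cudaGetErrorString(err_), __FILE__, __LINE__); \
            std::exit(EXIT_FAILURE);                                    \
        }                                                               \
    } while (0)

// Sketch of a naive kernel: one thread computes one element C[row][col].
// ASSUMPTION: row-major storage; A is m x n, B is n x k, C is m x k.
__global__ void matrixMultiplyKernel(const double *a, const double *b, double *c,
                                     int m, int n, int k) {
    int row = blockIdx.y * blockDim.y + threadIdx.y;
    int col = blockIdx.x * blockDim.x + threadIdx.x;
    if (row < m && col < k) {  // skip threads that fall outside C
        double sum = 0.0;
        for (int p = 0; p < n; ++p) {
            sum += a[row * n + p] * b[p * k + col];
        }
        c[row * k + col] = sum;
    }
}

// Hypothetical host wrapper: copies A and B to the device, launches the
// kernel, and copies C back to the host.
std::vector<double> multiplyOnDevice(const std::vector<double> &a,
                                     const std::vector<double> &b,
                                     int m, int n, int k) {
    assert(m > 0 && n > 0 && k > 0);
    assert(a.size() == static_cast<std::size_t>(m) * n);
    assert(b.size() == static_cast<std::size_t>(n) * k);
    std::vector<double> c(static_cast<std::size_t>(m) * k);

    double *dA = nullptr, *dB = nullptr, *dC = nullptr;
    CUDA_CHECK(cudaMalloc(&dA, a.size() * sizeof(double)));
    CUDA_CHECK(cudaMalloc(&dB, b.size() * sizeof(double)));
    CUDA_CHECK(cudaMalloc(&dC, c.size() * sizeof(double)));
    CUDA_CHECK(cudaMemcpy(dA, a.data(), a.size() * sizeof(double), cudaMemcpyHostToDevice));
    CUDA_CHECK(cudaMemcpy(dB, b.data(), b.size() * sizeof(double), cudaMemcpyHostToDevice));

    // 16 x 16 threads per block; enough blocks to cover all k columns and m rows.
    dim3 block(16, 16);
    dim3 grid((k + block.x - 1) / block.x, (m + block.y - 1) / block.y);
    matrixMultiplyKernel<<<grid, block>>>(dA, dB, dC, m, n, k);
    CUDA_CHECK(cudaGetLastError());  // catches launch-configuration errors

    // cudaMemcpy on the default stream waits for the kernel to finish first.
    CUDA_CHECK(cudaMemcpy(c.data(), dC, c.size() * sizeof(double), cudaMemcpyDeviceToHost));

    CUDA_CHECK(cudaFree(dA));
    CUDA_CHECK(cudaFree(dB));
    CUDA_CHECK(cudaFree(dC));
    return c;
}
```

For example, multiplying the 2 × 3 matrix [[1, 2, 3], [4, 5, 6]] by the 3 × 2 matrix [[7, 8], [9, 10], [11, 12]] with `multiplyOnDevice(a, b, 2, 3, 2)` should return {58, 64, 139, 154}. The naive form is shown because it maps most directly onto the documented parameters; a tiled shared-memory kernel usually performs better on large matrices because it reuses each loaded element of A and B across many threads.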